Adding speech recognition to your Jetpack Compose Android app — The Minimum Effort Guide

NB: pretty much all of the content of this post (or the inspiration for it) comes from this YouTube video by Indently. Please give them the credit they deserve (and if you're still using Views, take a look at the video!). All I did was update it to Jetpack Compose.

What you’ll have by the end of this post

An app with a button that launches Google's speech recognition and returns the resulting speech-to-text to the app, where it is displayed on screen.

But I want it now

OK fine, for those of you who just want grabby-grab, here’s the final code up-front. If you need it, the detail comes after…

```kotlin
package de.chrisward.googlespeechrecognitionprototype

import android.app.Activity
import android.content.Intent
import android.os.Bundle
import android.speech.RecognizerIntent
import androidx.activity.ComponentActivity
import androidx.activity.compose.rememberLauncherForActivityResult
import androidx.activity.compose.setContent
import androidx.activity.enableEdgeToEdge
import androidx.activity.result.contract.ActivityResultContracts
import androidx.compose.foundation.layout.Arrangement
import androidx.compose.foundation.layout.Column
import androidx.compose.foundation.layout.Spacer
import androidx.compose.foundation.layout.fillMaxSize
import androidx.compose.foundation.layout.padding
import androidx.compose.material3.Button
import androidx.compose.material3.Scaffold
import androidx.compose.material3.Text
import androidx.compose.runtime.Composable
import androidx.compose.runtime.mutableStateOf
import androidx.compose.runtime.remember
import androidx.compose.ui.Alignment
import androidx.compose.ui.Modifier
import androidx.compose.ui.unit.dp
import de.chrisward.googlespeechrecognitionprototype.ui.theme.GoogleSpeechRecognitionPrototypeTheme
import java.util.Locale

class MainActivity : ComponentActivity() {
    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        enableEdgeToEdge()
        setContent {
            GoogleSpeechRecognitionPrototypeTheme {
                Scaffold(modifier = Modifier.fillMaxSize()) { innerPadding ->
                    MainScreen(modifier = Modifier.padding(innerPadding))
                }
            }
        }
    }
}

@Composable
fun MainScreen(modifier: Modifier = Modifier) {
    // Text shown on screen; changing it triggers recomposition.
    val speechText = remember { mutableStateOf("Your speech will appear here.") }

    // Called when the speech recogniser activity returns a result.
    val launcher = rememberLauncherForActivityResult(ActivityResultContracts.StartActivityForResult()) {
        if (it.resultCode == Activity.RESULT_OK) {
            val data = it.data
            val result = data?.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS)
            // firstOrNull() avoids a crash if the list comes back empty.
            speechText.value = result?.firstOrNull() ?: "No speech detected."
        } else {
            speechText.value = "[Speech recognition failed.]"
        }
    }

    Column(
        modifier = modifier.fillMaxSize(),
        horizontalAlignment = Alignment.CenterHorizontally,
        verticalArrangement = Arrangement.Center
    ) {
        Button(onClick = {
            val intent = Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH)
            intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL, RecognizerIntent.LANGUAGE_MODEL_FREE_FORM)
            // EXTRA_LANGUAGE expects a BCP 47 tag string such as "en-GB", not a Locale object.
            intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE, Locale.getDefault().toLanguageTag())
            intent.putExtra(RecognizerIntent.EXTRA_PROMPT, "Go on then, say something.")
            launcher.launch(intent)
        }) {
            Text("Start speech recognition")
        }
        Spacer(modifier = Modifier.padding(16.dp))
        Text(speechText.value)
    }
}
```

And here's the not-so-tl;dr…

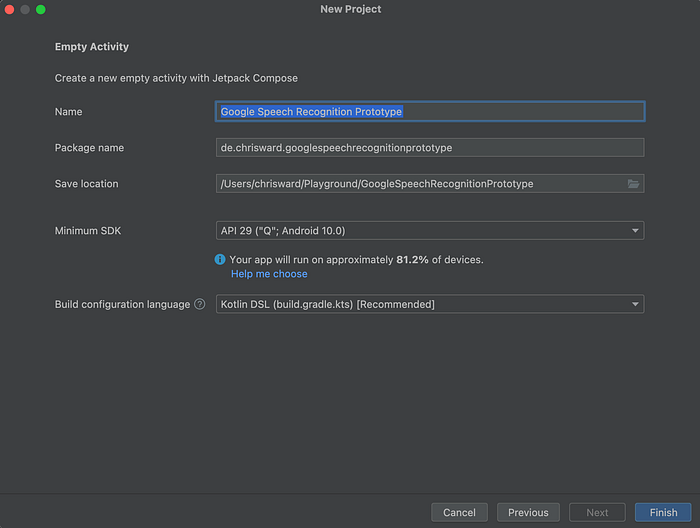

Create a new project in Android Studio

- Choose Empty Activity and select Next.

- Call it what you like, but I’m calling it Google Speech Recognition Prototype and I’m setting a Min SDK of API 29 (Android 10). Then press Finish.

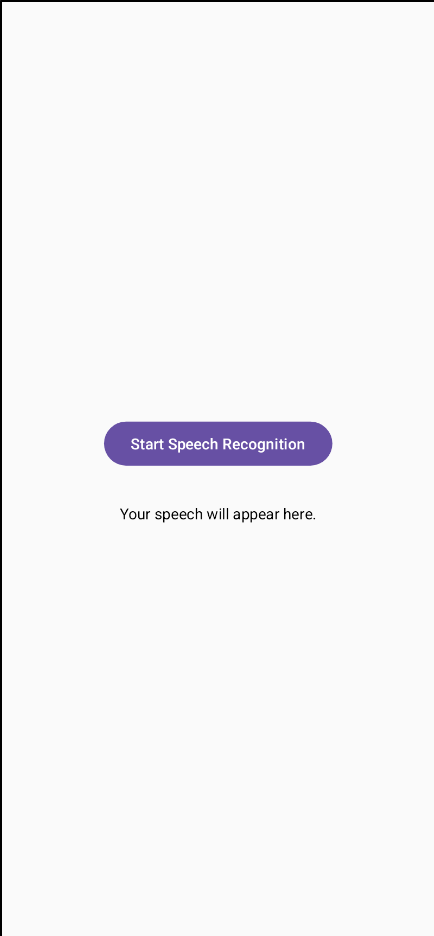

Create your screen

We want a simple screen with a Button and a Text component, centred both vertically and horizontally. The button launches speech recognition, and the text displays the recognised speech afterwards.

Let’s create a Composable for the screen called MainScreen. In it, we’ll have a Column with the Button and the Text centre-aligned…

```kotlin
@Composable
fun MainScreen(modifier: Modifier = Modifier) {
    val speechText = remember { mutableStateOf("Your speech will appear here.") }

    Column(
        modifier = modifier.fillMaxSize(),
        horizontalAlignment = Alignment.CenterHorizontally,
        verticalArrangement = Arrangement.Center
    ) {
        Button(onClick = {}) {
            Text("Start speech recognition")
        }
        Spacer(modifier = Modifier.padding(16.dp))
        Text(speechText.value)
    }
}
```

Nothing special here: just a column with a button and a text component. The Text reads from speechText, a MutableState declared at the top of the function, because we expect its value to change depending on what speech recognition returns. Wrapping it in remember keeps that value across recompositions.

Don't forget to change your onCreate method to use this MainScreen Composable instead of the template's built-in Greeting:

```kotlin
class MainActivity : ComponentActivity() {
    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        enableEdgeToEdge()
        setContent {
            GoogleSpeechRecognitionPrototypeTheme {
                Scaffold(modifier = Modifier.fillMaxSize()) { innerPadding ->
                    MainScreen(modifier = Modifier.padding(innerPadding))
                }
            }
        }
    }
}
```

Create the launcher for Android's built-in speech recognition

Previously you would have done this with startActivityForResult(), but that is now deprecated. The current way to launch another activity and get a result back is the Activity Result API, which in Compose means rememberLauncherForActivityResult. We register the launcher near the top of the Composable and only launch it, with the specific Intent, when the button is pressed. The registration looks like this:

```kotlin
val launcher = rememberLauncherForActivityResult(ActivityResultContracts.StartActivityForResult()) {
    if (it.resultCode == Activity.RESULT_OK) {
        val data = it.data
        val result = data?.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS)
        // firstOrNull() avoids a crash if the list comes back empty.
        speechText.value = result?.firstOrNull() ?: "No speech detected."
    } else {
        speechText.value = "[Speech recognition failed.]"
    }
}
```

Here we're simply stating what should happen with the result when Google's speech recogniser (or indeed any other activity started from launcher) returns.
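Because the callback only makes a decision from two inputs, the result code and the list of transcriptions, its logic can be sketched as a plain Kotlin function and unit-tested without a device. This is a minimal sketch under stated assumptions: `transcriptFor` is a hypothetical helper name, and its `resultOk` Boolean stands in for the `it.resultCode == Activity.RESULT_OK` check so the code needs no Android classes.

```kotlin
// Hypothetical helper mirroring the launcher callback, kept free of Android
// types so it runs on a plain JVM.
// resultOk stands in for: it.resultCode == Activity.RESULT_OK
// results stands in for: it.data?.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS)
fun transcriptFor(resultOk: Boolean, results: List<String>?): String {
    // Any non-OK result code (e.g. the user cancelling) is reported as a failure.
    if (!resultOk) return "[Speech recognition failed.]"
    // firstOrNull() covers both a null list and an empty one,
    // whereas get(0) would throw on an empty list.
    return results?.firstOrNull() ?: "No speech detected."
}
```

Inside the Composable, the callback body could then shrink to `speechText.value = transcriptFor(it.resultCode == Activity.RESULT_OK, it.data?.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS))`, keeping the branching logic in one testable place.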

First we check whether speech recognition succeeded:

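```kotlin
if (it.resultCode == Activity.RESULT_OK) {
    // ...
}
```

Otherwise (for example, if the user backs out of the recogniser, which normally returns RESULT_CANCELED), we change our text to show an error message.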

We then extract the speech text from the returned data by asking for the RecognizerIntent.EXTRA_RESULTS extra, a list of candidate transcriptions that is generally ordered from most to least confident, and take the first entry. If the recogniser returned no results, the list will be null or empty, so we state that no speech was detected instead.

```kotlin
val data = it.data
val result = data?.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS)
// firstOrNull() returns null for an empty list, where get(0) would throw.
speechText.value = result?.firstOrNull() ?: "No speech detected."
```

And here it is in the context of the rest of the code:

```kotlin
@Composable
fun MainScreen(modifier: Modifier = Modifier) {
    val speechText = remember { mutableStateOf("Your speech will appear here.") }

    val launcher = rememberLauncherForActivityResult(ActivityResultContracts.StartActivityForResult()) {
        if (it.resultCode == Activity.RESULT_OK) {
            val data = it.data
            val result = data?.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS)
            speechText.value = result?.firstOrNull() ?: "No speech detected."
        } else {
            speechText.value = "[Speech recognition failed.]"
        }
    }

    Column(
        modifier = modifier.fillMaxSize(),
        horizontalAlignment = Alignment.CenterHorizontally,
        verticalArrangement = Arrangement.Center
    ) {
        Button(onClick = {}) {
            Text("Start speech recognition")
        }
        Spacer(modifier = Modifier.padding(16.dp))
        Text(speechText.value)
    }
}
```

This is all fine and dandy, but it does nothing until we actually launch something with the launcher. So let's do that…

Launch the launcher

If you've used startActivityForResult() before, this will look familiar: you build the Intent exactly as you would have then, but instead of passing it to startActivityForResult(), you pass it to the launcher's launch method.

```kotlin
Button(onClick = {
    val intent = Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH)
    intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL, RecognizerIntent.LANGUAGE_MODEL_FREE_FORM)
    // EXTRA_LANGUAGE expects a BCP 47 tag string such as "en-GB", not a Locale object.
    intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE, Locale.getDefault().toLanguageTag())
    intent.putExtra(RecognizerIntent.EXTRA_PROMPT, "Go on then, say something.")
    launcher.launch(intent)
}) {
    Text("Start speech recognition")
}
```

All we're doing in the button's onClick lambda is creating an Intent with the RecognizerIntent.ACTION_RECOGNIZE_SPEECH action, specifying the free-form language model (meant for general dictation, as opposed to LANGUAGE_MODEL_WEB_SEARCH for search terms), setting the language to the device's default locale as a language tag, and optionally sending a prompt. Then we call the launch method of the launcher we created earlier.
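The language extra is worth a closer look. The RecognizerIntent documentation describes EXTRA_LANGUAGE as an IETF (BCP 47) language tag string such as "en-US". Passing `Locale.getDefault()` directly compiles, but it stores a serialised Locale object, which a recogniser reading the extra as a string will not see, so it likely falls back to its own default. The sketch below is plain JVM Kotlin with no Android dependencies; `recognitionLanguageTag` is a hypothetical helper name used only to show the difference between `Locale.toLanguageTag()` (hyphenated BCP 47 form) and `Locale.toString()` (underscore form).

```kotlin
import java.util.Locale

// Hypothetical helper: the string value to put in RecognizerIntent.EXTRA_LANGUAGE.
// toLanguageTag() yields a BCP 47 tag, e.g. "en-GB" for Locale.UK,
// whereas Locale.UK.toString() yields "en_GB", which is not a BCP 47 tag.
fun recognitionLanguageTag(locale: Locale = Locale.getDefault()): String =
    locale.toLanguageTag()
```

In the button's onClick, that corresponds to `intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE, Locale.getDefault().toLanguageTag())`.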

And as before, here’s that code snippet in the context of the rest of the code.

```kotlin
@Composable
fun MainScreen(modifier: Modifier = Modifier) {
    val speechText = remember { mutableStateOf("Your speech will appear here.") }

    val launcher = rememberLauncherForActivityResult(ActivityResultContracts.StartActivityForResult()) {
        if (it.resultCode == Activity.RESULT_OK) {
            val data = it.data
            val result = data?.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS)
            speechText.value = result?.firstOrNull() ?: "No speech detected."
        } else {
            speechText.value = "[Speech recognition failed.]"
        }
    }

    Column(
        modifier = modifier.fillMaxSize(),
        horizontalAlignment = Alignment.CenterHorizontally,
        verticalArrangement = Arrangement.Center
    ) {
        Button(onClick = {
            val intent = Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH)
            intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL, RecognizerIntent.LANGUAGE_MODEL_FREE_FORM)
            intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE, Locale.getDefault().toLanguageTag())
            intent.putExtra(RecognizerIntent.EXTRA_PROMPT, "Go on then, say something.")
            launcher.launch(intent)
        }) {
            Text("Start speech recognition")
        }
        Spacer(modifier = Modifier.padding(16.dp))
        Text(speechText.value)
    }
}
```

And that’s it…

This is the lowest-effort way of adding speech recognition to your app. If all you want is speech-to-text and you don't mind Google's UI, this'll do the job!